Hey {{first_name | there}},

Anthropic just spent millions on a Super Bowl ad that subtly shades OpenAI.

The message? “Ads are coming to AI. But not to Claude.”

This isn’t just marketing theater. It’s a fight over what AI chatbots become: advertising platforms or tools you can trust.

The way AI companies make money will directly shape how these tools behave when you use them.

Let’s get into all of that. But first, here are some tool recs for today:

🛠️ AI Tools Worth Checking Out

Voxtral Transcribe 2 by Mistral: An open-source speech-to-text model that runs locally on consumer hardware.

Image to Prompt Generator: Upload any image and get the prompt used to generate or recreate the same image with AI.

LullMe: AI-powered personalized meditations generated based on how you’re feeling in the moment.

Jobbyo AI: Helps automate and personalize job applications, making the search process faster and less repetitive.

The Super Bowl Ad That Got Sam Altman Angry

Anthropic’s Super Bowl campaign showed scenarios where AI assistants interrupt users mid-conversation with awkward, intrusive ads. One spot featured a guy asking about his mother, only to get hit with a cougar dating ad.

The tagline: “Claude is a space to think.”

The subtext: ChatGPT is becoming an ad platform.

And it worked. The ads got massive attention. They positioned Claude as the “clean” alternative and OpenAI as the company that monetizes your conversations.

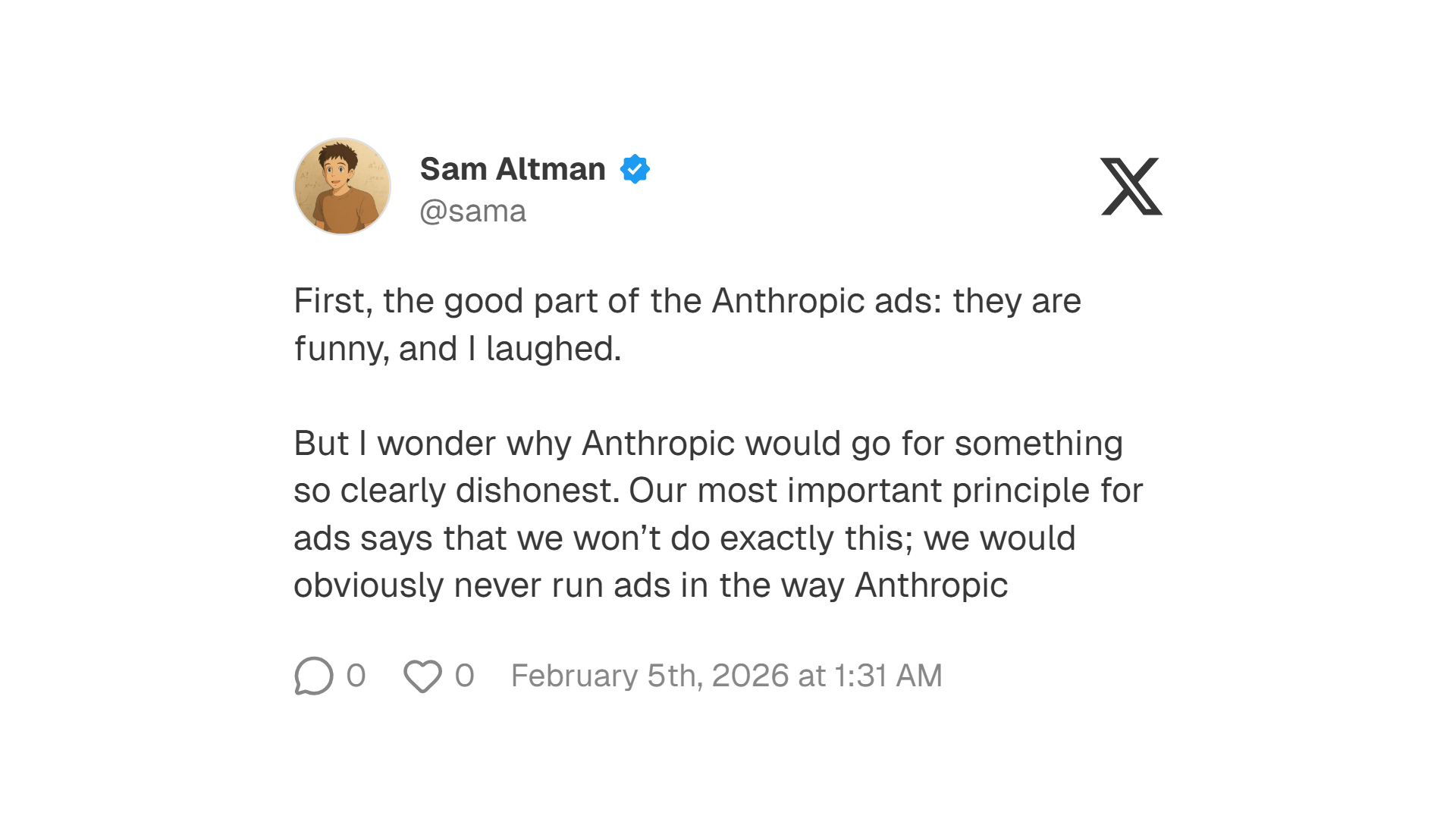

Sam Altman shot back on X, calling the ads “dishonest” and claiming OpenAI would “obviously never run ads in the way Anthropic depicts them.”

But here’s the thing: OpenAI already said ads are coming to ChatGPT’s free and low-cost tiers as sponsored links next to responses.

Altman says ads won’t influence answers. Anthropic’s bet is that users won’t trust that promise.

Why This Actually Matters

On one side: the advertising model. Free to use, supported by ads, potentially billions of users. OpenAI serves 800+ million people. Only 5% pay. That gap has to be monetized.

On the other side: the subscription model. Pay for access, no ads, theoretically better alignment with user interests. Anthropic is betting people will pay to keep their AI interactions clean.
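To put the free-tier gap in perspective, here is a quick back-of-the-envelope sketch using the two figures quoted above. It assumes "800+ million" means exactly 800 million and that the 5% figure applies to that whole base, so treat the output as a rough order of magnitude, not a reported number.

```python
# Rough arithmetic on the free-vs-paid gap, using the figures quoted above.
# Assumption: "800+ million" is treated as exactly 800 million for simplicity.
total_users = 800_000_000
paying_percent = 5

# Integer math keeps the result exact.
paying_users = total_users * paying_percent // 100
free_users = total_users - paying_users

print(f"Paying users: {paying_users:,}")
print(f"Free users:   {free_users:,}")
```

Under those assumptions, the non-paying group is 19 times the size of the paying one. That is the gap ads are meant to monetize.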

Anthropic said: “We want Claude to act unambiguously in our users’ interests.”

That’s a direct shot at OpenAI’s incentive structure.

The takeaway: incentives shape behavior. If ads pay the bills, engagement becomes the goal. If subscriptions pay, satisfaction and trust matter more.

The Tool That Broke The Market

Now, on to what happened on Tuesday:

Anthropic released a legal plugin for Claude - basically an AI assistant that can review contracts, track compliance, and handle document analysis.

Seems reasonable, right?

Not if you're a software company. Within hours, the selloff began.

Legal software stocks tanked. LegalZoom, Thomson Reuters, RELX - all down. But it didn't stop there.

The panic spread to the entire software sector. By end of day, $285 billion had evaporated from software, financial services, and asset management stocks.

Salesforce dropped 7%, bringing its 2026 decline to 26%. Intuit fell 11%. The IGV software ETF entered a bear market - down 22% from its highs.

One plugin. One day. $285 billion gone.

But here's what nobody's talking about - this wasn't the first warning shot.

The Layoff Blame Game

Now let's talk about the human cost.

Amazon announced 16,000 corporate job cuts. Pinterest and Dow cited AI automation as the reason for their layoffs.

Pinterest was "the most explicit in asserting that AI" drove the cuts. The CEO pointed directly at AI efficiency gains.

But here's where it gets dark.

Pinterest employees didn't know who got laid off. The company kept it quiet. So several engineers built an internal tool - a script that tracked which colleagues had disappeared from internal systems.

Last Friday, Pinterest fired those engineers for "violating company policy".

Read that again. A company blames AI for layoffs, refuses to be transparent about who was affected, then fires the engineers who tried to figure it out.

The BBC, CNBC, and CBS all covered it. The irony is suffocating.

Meanwhile, Amazon's 16,000 cuts led to headlines like "Amazon axes jobs as companies keep replacing workers with AI". But economists say "it can be hard to know if AI is the real reason behind the layoffs or if it's the message a company wants to tell Wall Street".

Companies are using AI as cover for restructuring. Wall Street loves the AI story. Employees pay the price.

NVIDIA’s Jensen Says Everyone’s Wrong

While software stocks were crashing Tuesday, Jensen Huang showed up at Cisco's AI Summit with a completely different message.

He called fears that AI will replace software "the most illogical thing in the world".

"There's this notion that the software industry is in decline and will be replaced by AI," Huang said. "It is the most illogical thing in the world, and time will prove itself".

His argument? AI will rely on existing software rather than rebuild basic tools from scratch.

He even compared demanding ROI from AI to "forcing a child to make money before you teach them to read".

But here's the problem - $285 billion in market value disagrees with him. And the investors who just walked away from a $100 billion deal with OpenAI? They seem to disagree too.

One Year Into “Vibe Coding”: The Big Skill Shift

While companies and markets argue, builders are going through their own transformation.

“Vibe coding” has exploded over the past year. Non-engineers are launching apps. Founders are prototyping in days.

But a year in, developers are noticing a pattern:

Projects built entirely through prompting often start fast… and then become messy, fragile, and hard to scale.

Common issues:

AI forgets dependencies

Code gets duplicated

Features pile up without structure

No one really understands the system

The gap is widening: experienced developers using AI are becoming dramatically faster, while beginners who rely only on prompts struggle once something breaks.

AI lowers the barrier to start building. It does not remove the need to understand what you built.

The winning approach looks like this:

Use AI for speed → Then refactor, clean up, and add human structure.
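Here is a toy sketch of what that cleanup step can look like. Every function name below is hypothetical and made up for illustration, not taken from any real project. The "before" half shows the kind of duplicated logic that prompt-generated code tends to pile up, and the "after" half shows a human pass that pulls it into one helper.

```python
# Hypothetical "before": two prompt-generated functions that each repeat
# the same email-cleaning logic.
def register_user_before(email: str) -> str:
    cleaned = email.strip().lower()
    return f"registered:{cleaned}"


def invite_user_before(email: str) -> str:
    cleaned = email.strip().lower()
    return f"invited:{cleaned}"


# Hypothetical "after": the shared logic lives in one place.
def normalize_email(email: str) -> str:
    """Trim whitespace and lowercase so every caller treats emails the same way."""
    return email.strip().lower()


def register_user(email: str) -> str:
    return f"registered:{normalize_email(email)}"


def invite_user(email: str) -> str:
    return f"invited:{normalize_email(email)}"
```

The behavior is unchanged. The difference is that a future rule change, such as a new way of cleaning emails, now happens in one function instead of being hunted down across the codebase.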

Vibe coding is a launchpad, not a long-term architecture.

What This Means for You

Here’s the big picture:

AI is getting more powerful. Companies are racing to monetize it. Markets are swinging wildly. Workers are feeling the pressure. Builders are moving faster than ever.

But underneath all of it is one core tension:

Speed vs sustainability.

Fast growth, fast automation, fast prototypes, but slower conversations about trust, incentives, job impact, and long-term quality.

As a user, builder, or professional, your edge isn’t just using AI.

It’s understanding:

Who pays for the tool

What incentives that creates

Where AI actually helps vs where it creates hidden risks

When to move fast — and when to slow down and add structure

The future of AI won’t just be decided by models.

It’ll be decided by the people who use them thoughtfully instead of blindly.

PS: Would you tolerate ads in ChatGPT for free access or switch to a paid, ad-free alternative? Hit reply and tell me.

— Aashish
